Last updated: June 17, 2023 11:22 AM (All times are UTC.)

June 01, 2030

Recent & Upcoming Talks by John Sear (@DiscoStu_UK)

June 16, 2023

Don’t ask me later by James Nutt (@zerosumjames)

This is just a minor vent, but why are notification choices so often just:

- Yes

- Not right now, but please keep asking me

“No, please don’t ask again” is a frustratingly rare option. I know, I know. Notifications drive engagement and you’re incentivised to keep pestering me until I give in. I empathise with your situation.

However, I do wish you’d stop.

June 09, 2023

Reading List 305 by Bruce Lawson (@brucel)

- FCC Requires Video Conferencing Accessibility & Proposes ASL Support – yay.

- A Heart-felt Apology and a Chance to Start Again from AccessiBe, overlay maker. Hurray!

- Meanwhile, AudioEye Is Suing Me – totally definitely NOT an overlay maker, AudioEye, is suing Adrian Roselli. Boooo.

- Overlays – Web Accessibility for Business Owners Dan Payne has a soothing voice and doesn’t swear or thump the table when explaining why accessibility overlays aren’t the panacea they often pretend to be.

- Getting started with View Transitions on multi-page apps – “It took less than an hour to do, requires zero JavaScript, and two lines of CSS. I’m pleased with the results”. Progressive enhancement with declarative code – real web developers love to see it!

- Amazon Luna will drop Windows and Mac apps as it ‘doubles down’ on web app – “We saw customers were spending significantly more time playing games on Luna using their web browsers than on native PC and Mac apps. When we see customers love something, we double down. We optimized the web browser experience with the full features and capabilities offered in Luna’s native desktop apps”

- Talking of which, News from WWDC23: WebKit Features in Safari 17 beta – “Web apps are coming to Mac”. No news of the View Transitions spec or meaningful browser choice on iThings.

- Web Apps on macOS Sonoma 14 Beta – some tech notes by Thomas Steiner

- A series of hands‐on guides to assitive technology and accessibility testing

- 11 HTML best practices for login & sign-up forms – includes the evergreen “All clickables should use button or a, not div or span”. Sadly, there are people allowed within 14 miles of the front end who still need to be told this.

- Fieldsets, Legends and Screen Readers again – Steve “Chuckles” Faulkner updated his 15 year old blog post.

- Semantics and the popover attribute: what to use when? – Hidde on the new HTML attribute named after one of my favourite Soviet constructivist artists, Lyubov Sergeyevna Popova (Любо́вь Серге́евна Попо́ва). I like things like popover, dialog, details and summary – they’re common pains for people who use keyboards or assistive tech, and have often prompted “Full-stack” developers (as opposed to Web Developers) to drag in libraries and frameworks in order to implement them.

- The Industrial Hammer Complex – What we actually have is an industrial complex bent on manufacturing only hammers, committed to convincing you that only nails exist, and motivated to sell you a lifetime hammer subscription!” I recently investigated a page that had different spinners for each block (header, footer, nav – because MiCrOsErViCeS!!!). It preloaded loads of WebRTC framework, OAuth shit (because SPAs are gReAT!!) and an SVG logo (that contained a 1.6MB bitmap). All this, to show a login form, which took 45 secs over 3G.

- Boring Report is an app that aims to remove sensationalism from the news. In today’s world, catchy headlines and articles often distract readers from the actual facts and relevant information.” Using AI, Boring Report processes exciting news articles and transforms them so readers focus on the essential details and minimises the impact of sensationalism.

- Google’s Open Source AI Tool Is a Major Step Forward for Accessibility in Gaming – “Project Gameface uses your head and facial movements to control a mouse cursor.”

- US Agency Releases Free Stock Photos of People With Disabilities

- When digital nomads come to town -“Cities from Canggu to Medellín are welcoming tech workers, but locals complain they’re being priced out. The digital nomads’ visits are transitory, but they leave neighborhoods permanently transformed. Today, there are streets in Medellín, as in Mexico City or Canggu, that look more like Bushwick — where English is more common than the local language. Building exteriors retain their historic character, but interiors converge to a sterile homogeneity of hotdesking, free charging outlets, affordable coffee, and Wi-Fi with purchase.”

- Rishi Sunak alt text tweet criticised for misusing accessibility feature – #AltTextGate

- Kenny Log In – “GENERATE A SECURE PASSWORD FROM THE LYRICS OF AMERICA’S GREATEST SINGER SONGWRITER”

June 05, 2023

CENTRAL BIRMINGHAM 2040: Shaping Our City Together (unofficial accessible version) by Bruce Lawson (@brucel)

My chum Stuart is a civic-minded sort of chap, so he drew my attention to Birmingham’s strategic plan for 2040. There’s a lot to be commended in the plan’s main aims (although it’s a little light on detail, but that’s a ‘strategy’, I guess). However, we noticed that it was hard to find on the Council website (subsequently rectified and linked from the cited page).

I was also a bit grumpy that it is circulated as a 43 MB PDF document, which is a massive download, especially for poorer members of the population who are more likely to be using phones than a desktop computer (PDF, lol), and more likely to have pay-as-you-go data plans (PDF, ROFL) which are more expensive per megabyte than contracts.

PDFs are designed for print so don’t resize for phone screens, requiring tedious horizontal scrolling–potentially a huge barrier for some people with disabilities, and a massive pain in the arse for everyone. For people who don’t read English well, PDFs are harder for translation software to access, so I’ve made an accessible HTML version of the Shaping Our City Together document.

I haven’t included the images, which are lovely but heavy, for two reasons. The first is that many are created by someone called Tim Cornbill and I don’t want to infringe their copyright. Some of the illustrations are captioned “This concept image is an artist’s impression to stimulate discussion, it does not represent a fixed proposal or plan”, so I decided they were not content but presentational and therefore unnecessary.

Talking of copyright, the document is apparently Crown Copyright. Why? I helped pay for it with my Council tax. Furthermore, I am warned that “Unauthorised reproduction infringes Crown Copyright and may lead to prosecution or civil proceedings”, so if Birmingham Council want me to take this down, I will. But given that the report talks glowingly of the contribution made to the city’s history by The Poors and The Foreigns, it seems a bit remiss to have excluded them from a consultation about the City’s future.

Because I am not a designer, the page is lightly laid out with Alvaro Montoro’s “Almond CSS” stylesheet. I am, however, an accessibility consultant. The Council could hire me to sort out more of their documents (so could you!).

May 31, 2023

State of the Browser 2022 video: IE6 – RIP or BRB by Bruce Lawson (@brucel)

Here’s the video of my talk.

The slides are available.

My White Whale by Luke Lanchester (@Dachande663)

I have a project, codenamed Ox currently, that has been consuming me for the past seven years.

It’s not a difficult project, not when I know what I want out of it. Like dealing with any client though, the hard part is knowing what I want out of it.

It’s supposed to be a… space, for me. Track scrobbles, games played, photos taken and where, people, friends, control lights, last forever, be available everywhere but only locally.

I have gone through iteration after iteration, oftentimes using it as an excuse to spelunk into some deep dark corner of technology to my lizard brain.

- Static files with a CLI tool to parse and extract

- Golang, rust, electron desktop GUIs

- SQLite data replicated via CRDTs

- Docker, firecracker, wasm engines to execute simple scripts

- A simple PHP & MySQL website

Everytime, I find a reason not to continue. I am on version 44. I passed the meaning of life and still can’t sleep because I think of another angle.

I know the solution is to write a spec req doc.

I know the solution is to split this out into tool A, tool B, tool C.

I know the solution is to use off-the-shelf tools.

But I don’t know.

May 25, 2023

Every bunch of tech hippies needs a touch of Bruce™ by Bruce Lawson (@brucel)

Since my last employer decided just before Xmas that it didn’t need an accessibility team any more, I’ve been sporadically applying for jobs, between some freelance work and finishing the second album by the cruellest months (give it a listen while you read on!).

Two days ago I was lucky enough to receive two rejection letters from acronym-named corporations. Both were received only a couple of working hours after I eventually hit submit in their confusing (and barely-accessible) third-party job application portals, which makes me suspicious of their claims to have “carefully considered” my application. One rejection, I suspect, was because I’d put too large a figure in the ‘expected salary’ box; how am I supposed to know, when the advertised salary is “competitive”? In retrospect, I should have just said typed “competitive” into the box.

The second rejection reason is a little harder to discern. As I exceeded all the criteria, I suspect admitting to the crimes of being over 50 and mildly disabled worked against me. But I’ll never know; no feedback was offered, and both auto-generated emails came from a no-reply email address. (Both orgs make a big deal on their sites about valuing people etc. Weird.)

Apart from annoyance at the time I wasted (I have blogposts to write, and songs to record!) I remembered that I hate acronymy corporate jobs anyway. So, if you need someone on a short-term/ part-time (or long-term) basis to help educate your team in accessibility, evaluate your project and suggest improvements, give me a yell. In answer to a question on LinkTin, I’ve listed the accessibility and web standards services I offer.

Mates’ Rates if you’re a non-acronymy small Corp who are actively trying to make the world better rather than merely maximise shareholder value. Remember: every bunch of tech hippies needs a touch of Bruce .

.

May 15, 2023

I’ve released my second album “High Priestess’ Songs” by the cruellest months, the name I’ve given to the loose collaboration between me and assorted friends to record and release my songs.

High Priestess' Songs by the cruellest months

Please, give it a listen and consider buying it for £5 so I can buy a pint while I’m looking for a new job.

(Last Updated on )

May 12, 2023

Reading List 304 by Bruce Lawson (@brucel)

- The Fugu project: priorities, Mozilla and Apple, support realms, Web vs native, and future plans – Vadim and I interrogate Thomas Steiner on Te F-word

- Scoped CSS is back (in Chromium, behind a flag). Great news for ending CSS-in-JS abominations.

- Add the WAI-ARIA Usage bookmarklet to evaluate document conformance requirements for use of ARIA attributes in HTML and allowed ARIA roles, states, and properties.

- Meeting WCAG Level AAA – Pattypoo cascades his wisdom

- The ongoing defence of frontend as a full-time job

– “You can hire frontend developers to build your product, or make it happen somehow and later on hire performance and accessibility consultants to fix what doesn’t work properly.” – @codepo8 on why “frontend developer” is a real, and vital, specialism. - Scaling up the Prime Video audio/video monitoring service and reducing costs by 90% – The move from a distributed microservices architecture to a monolith application helped achieve higher scale, resilience, and reduce costs.

- How to use AI to do practical stuff: A new guide – “People often ask me how to use AI. Here’s an overview with lots of links.”

- Small Penises and Fast Cars: Evidence for a Psychological Link – “We found that males, and males over 30 in particular, rated sports cars as more desirable when they were made to feel that they had a small penis”. Thanks, Science!

April 21, 2023

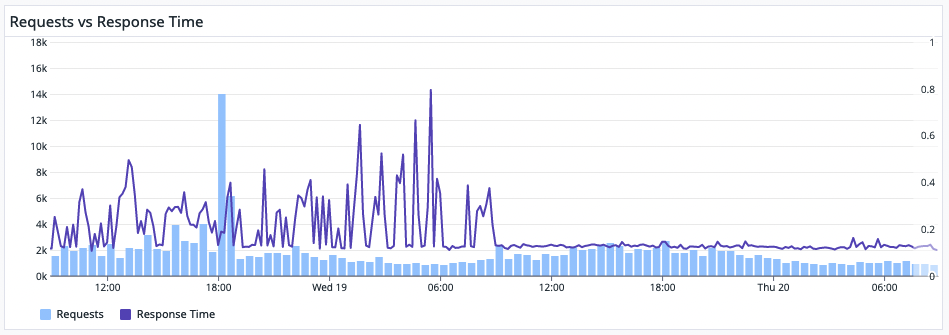

Response Time by Luke Lanchester (@Dachande663)

Feels good.

April 14, 2023

Reading List 303 by Bruce Lawson (@brucel)

Some interesting stuff I’ve been reading lately. Don’t forget, I’m available for part-time accessibility / web standards consultancy to help any organisation that isn’t making the world worse (and better rates for orgs that are actively trying to make the world better).

- Safari releases are development hell – “We make the browser-based game creation app Construct … I wanted to share our experience so customers, developers, regulators, and Apple themselves can see what we go through with what is supposed to be a routine Safari release.”

- Modern Font Stacks – “System font stack CSS organized by typeface classification for every modern OS. The fastest fonts available. No downloading, no layout shifts, no flashes — just instant renders.”

- The Most Dangerous Codec in the World: Finding and Exploiting Vulnerabilities in H.264 Decoders – head-hurtingly detailed PDF.

- People do use Add to Home Screen – Mozilla did some user testing. “four of ten people in a user test – what does that tell us? It tells us that it’s something that at least some regular people do and that it’s not a hidden power user feature”.

- The Automation Charade The rise of the robots has been greatly exaggerated. Whose interests does that serve?

- AI and the American Smile – How AI misrepresents culture through a facial expression

- Floor796 “is an ever-expanding animation scene showing the life of the 796th floor of the huge space station! The goal of the project is to create as huge animation as possible, with many references to movies, games, anime and memes”. Drag around and click to find out more.

April 06, 2023

Sonnet 2 by James Nutt (@zerosumjames)

Through PHP and Java, skills were born

But settled now on Ruby, life’s true love.

To not look down on past projects with scorn

But elevate my craft and look above.

O, Ruby! A Bridgetown site could not hold

My love for thee, inside of markdown lines.

A fresh Rails application could not load.

Your name in the Gemfile - a thousand times.

Metaprogramming a new DSL

With nested blocks and elegant syntax

Cannot capture the rush of my heart’s swell

For all the language holds, still my tongue lacks.

Ruby 3, YJIT too, and also minitest

Static sites and CLIs! Ruby, you’re the best.

April 04, 2023

Dungeons & Dragons: Honour Among Thieves, a review by Stuart Langridge (@sil)

So, Dungeons & Dragons: Honour Among Thieves, which I have just watched. I have some thoughts. Spoilers from here on out!

Up front I shall say: that was OK. Not amazing, but not bad either. It could have been cringy, or worthy, and it was not. It struck a reasonable balance between being overly puffed up with a sense of epic self-importance (which it avoided) and being campy and ridiculous and mocking all of us who are invested in the game (which it also avoided). So, a tentative thumbs-up, I suppose. That’s the headline review.

But there is more to be considered in the movie. I do rather like that for those of us who play D&D, pretty much everything in the film was recognisable as an actual rules-compliant thing, without making a big deal about it. I’m sure there are rules lawyers quibbling about the detail (“blah blah wildshape into an owlbear”, “blah blah if she can cast time stop why does she need some crap adventurers to help”) but that’s all fine. It’s a film, not a rulebook.

I liked how Honour Among Thieves is recognisably using canon from an existing D&D land, Faerûn, but without making it important; someone who doesn’t know this stuff will happily pass over the names of Szass Tam or Neverwinter or Elminster or Mordenkainen as irrelevant world-building, but that’s in there for those of us who know those names. It’s the good sort of fanservice; the sort that doesn’t ruin things if you’re not a fan.

(Side notes: Simon is an Aumar? And more importantly, he’s Simon the Sorcerer? Is that a sly reference to the Simon the Sorcerer? Nice, if so. Also, I’m sure there are one billion little references that I didn’t catch but might on a second or third or tenth viewing, and also sure that there are one billion web pages categorising them all in exhaustive detail. I liked the different forms of Bigby’s Hand. But what happened to the random in the gelatinous cube?)

And Chris Pine is nowhere near as funny as he thinks he is. Admittedly, he’s playing a character, and obviously Edgin the character’s vibe is that Edgin is not as funny as he thinks he is, but even given that, it felt like half the jokes were delivered badly and flatly. Marvel films get the comedy right; this film seemed a bit mocking of the concept, and didn’t work for me at all.

I was a bit disappointed in the story in Honour Among Thieves, though. The characters are shallow, as is the tale; there’s barely any emotional investment in any of it. We’re supposed, presumably, to identify with Simon’s struggles to attune to the helmet and root for him, or with the unexpectedness of Holga’s death and Edgin and Kira’s emotions, but… I didn’t. None of it was developed enough; none of it made me feel for the characters and empathise with them. (OK, small tear at Holga’s death scene. But I’m easily emotionally manipulated by films. No problem with that.) Similarly, I was a bit annoyed at how flat and undeveloped the characters were at first; the paladin Xenk delivering the line about Edgin re-becoming a Harper with zero gravitas, and the return of the money to the people being nowhere near as epically presented as it could have been.

But then I started thinking, and I realised… this is a D&D campaign!

That’s not a complaint at all. The film is very much like an actual D&D game! When playing, we do all strive for epic moves and fail to deliver them with the gravitas that a film would, because we’re not pro actors. NPCs do give up the info you want after unrealistically brief persuasion, because we want to get through that quick and we rolled an 18. The plans are half-baked but with brilliant ideas (the portal painting was great). That’s D&D! For real!

You know how when someone else is describing a fun #dnd game and the story doesn’t resonate all that strongly with you? This is partially because the person telling you is generally not an expert storyteller, but mostly because you weren’t there. You didn’t experience it happening, so you missed the good bits. The jokes, the small epic moments, the drama, the bombast.

That’s what D&D: Honour Among Thieves is. It’s someone telling you about their D&D campaign.

It’s possible to rise above this, if you want to and you’re really good. Dragonlance is someone telling you about their D&D campaign, for example. Critical Role can pull off the epic and the tragic and the hilarious in ways that fall flat when others try (because they’re all very good actors with infinite charisma). But I think it’s OK to not necessarily try for that. Our games are fun, even when not as dramatic or funny as films. Honour Among Thieves is the same.

I don’t know if there’s a market for more. I don’t know how many people want to hear a secondhand story about someone else’s D&D campaign that cost $150m. This is why I only gave it a tentative thumbs-up. But… I believe that the film-makers’ attempt to make Honour Among Thieves be like actual D&D is deliberate, and I admire that.

This game of ours is epic and silly and amateurish and glorious all at once, and I’m happy with that. And with a film that reflects it.

March 28, 2023

“Whose web is it, anyway?” My axe-con talk by Bruce Lawson (@brucel)

The nice people at Deque systems asked me to kick off the developer track of their Axe Conference 2023. You can view the design glory of my slides (and download the PDF for links etc). Here’s the subtitled video.

(We’ll ignore the false accusation in the closed captions that I was speaking *American* English.)

I generated the transcript using the jaw-droppingly accurate open-source Whisper AI. Eric Meyer has instructions on installing it from the command line. Mac users can buy a lifetime of updates to MacWhisper for less than £20, which I’ve switched to because the UI is better for checking, correcting and exporting (un-timestamped) transcripts like we use for our F-word podcast (full of technical jargon, with a Englishman, a Russian and even an American speaking English with their own accents). This is what Machine Learning is great for: doing mundane tasks fast and better, not pirating other people’s art to produce weird uncanny valley imagery. I’m happy to welcome my robot overlords if they do drudgery for me.

March 27, 2023

Making Things by Luke Lanchester (@Dachande663)

I have hit a block. Not a writing block, not per-se. More of a mental staircase of ascending excuses. I just can’t make things anymore for myself.

I used to love bashing out small scripts in various languages. Sometimes to scratch an itch in learning something new. Sometimes just to fix a pain point elsewhere. These scripts were… horrible. But they ran. They worked. Often they didn’t have tests, but you could grok the entire codebase in two scrolls of any IDE.

Nowadays, I do most of my day-to-day work been paid to write large systems. Enterprise auth, backend orchestration. Everything is specced, tested, deployed and versioned. This gives me great confidence in what we’re shipping.

But then, these two worlds collide and I end up… doing nothing.

I wanted to make a tiny photo library script to just read some shares off of a NAS and show me pictures taken on that day in years gone by. But all of a sudden I was setting up Dockerfiles and dependencies, working out if VIPS was better that imagemagick.

I couldn’t just query a DB, I had to design a schema with type-hinting and support for migrations later on, and what if I wanted to share an image outside the network or $DIETY forbid, share the application with others.

Where do I land on the spectrum of a one-file Python or PHP or whatever script that just scrapes a glob exec command’s output, and setting up a k3s cluster with a rust-build toolchain that analyzes everything and has full support for expanding into anything and everything.

I want to solve problems. That’s why I became a dev. Not to become an architecture astronaut or sit infront of a terminal window paralysed about making the wrong choice.

Frog Porridge by James Nutt (@zerosumjames)

1.312723258290198, 103.87928286812601

There’s a productivity technique called “Eat the Frog.” As I understand it, the idea is this: every day, you start with the most challenging task on your list (the frog) and you do that first (eat it). This feeling of accomplishment will either carry you breezily through several more tasks, or you take the rest of the day off, but can rest happily in the knowledge that you’ve got at least one big thing less to worry about. It sounds like a sensible system. If the idea behind “eat the frog” is based on the idea that frogs are difficult or unpleasant to eat, however, then it’s time for it to be renamed.

On a street corner in Singapore, a tornado of staff ferries bowls of frog porridge and an assortment of other dishes to small plastic tables. An open kitchen and relatively bare surroundings, the overall impression is less of a restaurant, but rather buying food directly from the foreman of a busy factory floor. A woman with carefully manicured and bejewelled nails, in contrast to almost everything else within eyesight, takes our money and seems to telepathically transmit our order to the small army of cooks in the background, who all the while have been stirring and ladling.

With a few particular exceptions, I’ll try to eat almost anything once. Most things I’ll eat as often as I can get my hands on. Still, I’d never eaten frog porridge before and it’s normal to be a little apprehensive about the unknown. Will it be slimy or crispy? Do frogs… have bones? Will they be diced, or a sort of paste, or a complete set of legs, lifted directly from a children’s comic book? In other words, will it be recognisably froggy?

We ordered one bowl of porridge with three spicy frogs to go, killed twenty minutes wandering the neighbourhood, circled back, and picked them up. More or less what one might expect. Small chunks of soft and slightly chewy meat in an extremely rich, salty, dark brown sauce. Visibly meaty, but you’d be hard pressed to say which animal just by looking. All sat atop a delicious bowl of rice porridge.

Between the unflagging love for aircon and what struck me as uncharacteristically cold weather, I’d somehow spent most of the trip being cold. The weather app was telling me it was 28°C, which I doubted. Further down the page, it tells me that it “feels like 32.” The app, perhaps as surprised as I am at the cold, was doing its best to deny reality. I thought about the jacket that I’d left at home, a puffy bomber that would probably shoot me from too cold straight to being too warm. I’ve managed to cunningly ensure that I can be uncomfortable in every situation. Busines as usual. At least the porridge was good.

March 24, 2023

Reading List 302 by Bruce Lawson (@brucel)

- Checking out and building Chromium for iOS – yup, you heard that right: real Chromium on iOS.

- The new HTML <search> element means a native way to let Assistive Tech what something is without

role="Search" - How Shadow DOM and accessibility are in conflict by Alice Boxhall

- Unicode Roman Numerals and Screen Readers by Uncle Tezza

- Building an Accessibility Library Give UX designers the tools to build products everyone can use.

- GitHub Copilot investigation – “We’re investigating a potential lawsuit against GitHub Copilot for violating its legal duties to open-source authors and end users”

- Building Accessible React Applications: Best Practices for Senior Engineers – this is all good advice. But why best practices for *Senior* engineers? If you aren’t doing these things, you ain’t an engineer.

- Why is Apple making big improvements to web apps for iPhone? Apple seems to be working on features that make web applications a little more like native apps. Now, why would Apple want to do that?

- What WCAG 2.2 Means for Native Mobile Accessibility

- Learn Privacy – A course to help you build more privacy-preserving websites, by Stuart Langridge

- Dumb Password Rules – A compilation of 292 sites with dumb password rules.

- The Jennifer Mills News – I love this: for the last 20 years, Jennifer Mills has been reporting the mundane things in her life in the style of an old-time regional newspaper, every Friday

March 07, 2023

Diagnosing “early termination of worker” errors by James Nutt (@zerosumjames)

You might be trying to start your Rails server and getting something like the following, with no accompanying stack trace to tell you exactly what it is that’s gone wrong.

[10] Early termination of worker

[8] Early termination of worker

This is a note-to-self after encountering this when trying to upgrade a Rails app from Ruby 2.7 to 3.2. What helped me was a comment from this Stack Overflow post.

If rails s or bundle exec puma is telling you the workers are being terminated but not telling you why, try:

rackup config.ru

February 17, 2023

Reading List 301 by Bruce Lawson (@brucel)

- Link o’The Week: The Market for Lemons – Big Al Russell on how managers and developers have come to believe -against all evidence- that massive frameworks are better for making web things.

- A Historical Reference of React Criticism Over 8 years worth of people pointing out the performance calamity that is React, curated by Zach Leatherman

- Why I’m not the biggest fan of Single Page Applications – to do them well you have to reimplement lots of things browsers do by defaults in normal web pages. Hopefully when the View Transitions API comes to multi page apps we’ll square the circle

- Container queries land in stable browsers – a short tutorial by Una Kravets

- I just wanted to buy pants How excessive JavaScript is costing you money

- The case for frameworks – Laurie Voss makes the case for React. It’s well-argued, but I’m unconvinced everybody has considered the downsides and balanced the risks. Everyone I consult with seems shocked at the performance and accessibility nasties of many of the popular frameworks.

- CSS lazy loading is broken in Safari by Vadim Makeev

- The (extremely) loud minority – Andy Bell, a Northerner, gets righteously grumpy again. I’m worried about the lad’s blood pressure, to be honest. Have a pontefract cake, mate, and take the weight of the world off your whippet.

- Nintendo to increase wages 10% despite lowered forecast – “It’s important for our long-term growth to secure our workforce” president Shuntaro Furukawa said

- Microsoft to phase out Internet Explorer with newer Edge browser – An update will begin to replace its 27-year-old predecessor from Tuesday. Farewell, old frenemy.

February 13, 2023

Clog by Luke Lanchester (@Dachande663)

There was recently a discussion on Hacker News around application logging on a budget. At work I’ve been trying to keep things lean, not to the point of absurdity, but also not using a $100 or $1000/month setup, when a $10 one will suffice for now. We settled on a homegrown Clickhouse + PHP solution that has performed admirably for two years now. Like everything, this is all about tradeoffs, so here’s a top-level breakdown of how Clog (Clickhouse + Log) works.

Creation

We have one main app and several smaller apps (you might call them microservices) spread across a few Digital Ocean instances. These generate logs from requests, queries performed, exceptions encountered, remote service calls etc. We use monolog in PHP and just a standard file writer elsehwere to write new-line delimited JSON to log files.

In this way, there is no dependency between applications and the final logs. Everything that follows could fail, and the apps are still generating logs ready for later collection (or recollection).

Collection

On each server, we run a copy of filebeat. I love this little thing. One binary, a basic YAML file, and we have something that watches our log files, adds a few bits of extra data (the app, host, environment etc), and then pushes each line into a redis queue. This way our central logging instance doesn’t need to have any knowledge of each of the instances which can come and go.

(Weirdly, filebeat is part of elastic so can be used as part of your normal ELK stack, meaning if we wanted to change systems later, we have a natural inflection point.)

There’s definitely bits we could change here. Checking queue length, managing backpressue, etc. But do you know what? In 24 months of running this in production, ingesting between 750K to 1M logs a day, none of that has actually been a problem. Will it be a problem when we hit 10M or 100M logs a day? Sure. But then we have a different set of resources to hand.

Ingesting

We now have a redis queue with a queue of JSON log lines. Originally this was a redis server running on the clog instance, but we later started using a managed redis server for other things so migrated this to. Our actual Clog instance is a 4GB DO instance. That’s it. Initially it was a 2GB (which was $10), so I don’t think we’re too far off the linked HN discussion.

The app to read the queue and add to Clickhouse is… simple. Brutally simple. Written in PHP using the PHP Redis extension in an afternoon, it runs BLPOP in an infinite loop to take an entry, run some very basic input processing (see next), and insert it into Clickhouse.

That processing is the key to how this system stays (fairly) speedy and is 100% not my idea. Uber is one of the first I could find who detailed how splitting up log keys from log values can make querying much more efficient. Combined with materialized views, we can get something very robust that will handle 90% of things we throw at it

Say we have a JSON log like so:

{

"created": "2022-12-25T13:37:00.12345Z",

"event_type": "http_response",

"http_route": "api.example"

}This is turned into a set of keys and values based on type:

"datetime_keys": ["created"],

"datetime_values": [DateTime(2022-12-25T13:37:00.12345Z)],

"string_keys": ["event_type", "http_route"],

"string_values": ["http_response", "api.example"]Our clickhouse logs table is:

- Partitioned by log created date;

- Has some top-level columns for things we’ll always have like application name, environment, etc;

- Array-based string columns for the *_keys columns;

- Array-based type-specific columns the *_values columns;

- A set of materialized views for pre-defined columns e.g.

matcol_event_type String MATERIALIZED string_values[indexOf(string_keys, 'event_type')]. This pulls out the value of event_type, and creates a virtual column that is stored. This makes queries for these columns much quicker. - A data retention policy to automatically remove data after 180 days.

This isn’t perfect. Not by a long shot. But it means we’ve been able to store our logs and just… not worry about costs spiralling out of control. A combination of a short retention time, Clickhouse’s in-built compression, and just realising that most people aren’t going to be generating TBs of logs a day, means we’ve flown by with this system.

Querying & Analysing

Querying is, again, very simple. Clickhouse offers packages for most languages, but also supports MySQL (and other) interfaces. We already have a back-office tool (in my experience, one of the first things you should work on), that makes it drop-dead simple to add a new screen and connect it to Clickhouse.

From there we can list logs with basic filters and facets. The big advantage I’ve found here over other log-specific tools is we can be a bit smart and link back into the application. For example, if a log includes a “auth_user_id” or “requested_entity_id”, we can link this to an existing information page in our back-office automatically.

Conclusions

There are definitely rough edges in Clog. A big one is that it’s simply an internal tool which means existing knowledge of other tools is lost. Some of the querying and filtering can definitely use some UX love. The alerts are hard-coded. And more.

But, in the two plus years we’ve been using Clog it has cost us a couple hundred dollars and all told, a day or two of my time, and in return saved us an order of magnitude more when pricing up hosted cloud options. This has given us a much longer runway.

I 100% wouldn’t recommend DIY NIH options for everything, but I Clog has paid off for what we needed.

February 12, 2023

On association by Graham Lee

My research touches on the professionalisation (or otherwise) of software engineering, and particularly the association (or not) of software engineers with a professional body, or with each other (or not) through a professional body. So what’s that about?

In Engagement Motivations in Professional Associations, Mark Hager uses a model that separates incentives to belong to a professional association into public incentives (i.e. those good for the profession as a whole, or for society as a whole) and private incentives (i.e. those good for an individual practitioner). Various people have tried to argue that people are only motivated by the private incentives (i.e. de Tocqueville’s “enlightened self-interest”).

Below, I give a quick survey of the incentives in this model, and informally how I see them enacted in computing. If there’s a summary, it’s that any idea of professionalism has been enclosed by the corporate actors.

Public incentives

Promoting greater appreciation of field among practitioners

My dude, software engineering has this in spades. Whether it’s a newsletter encouraging people to apply formal methods to their work, a strike force evangelising the latest programming language, or a consultant explaining that the reason their methodology is failing is that you don’t methodologise hard enough, it’s not incredibly clear that you can be in computering unless you’re telling everyone else what’s wrong with the way they computer. This is rarely done through formal associations though: while the SWEBOK does exist, I’d wager that the fraction of software engineers who refer to it in their work is 0 whatever floating point representation you’re using.

Ironically, the software craftsmanship movement suggests that a better way to promote good practice than professional associations is through medieval-style craft guilds, when professional associations are craft guilds that survived into the 20th century, with all the gatekeeping and back-scratching that entails.

Promoting public awareness of contributions in the field

If this happens, it seems to mostly be left to the marketing departments of large companies. The last I saw about augmented reality in the mainstream media was an advert for a product.

Influencing legislation and regulations that affect the field

Again, you’ll find a lot of this done in the policy departments of large companies. The large professional societies also get involved in lobbying work, but either explicitly walk back from discussions of regulation (ACM) or limit themselves to questions of research funding. Smaller organisations lobby on single-issue platforms (e.g. FSF Europe and the “public money, public code” campaign; the Documentation Foundation’s advocacy for open standards).

Maintaining a code of ethics for practice

It’s not like computering is devoid of ethics issues: artificial intelligence and the world of work; responsibility for loss of life, injury, or property damage caused by defective software; intellectual property and ownership; personal liberty, privacy, and data sovereignty; the list goes on. The professional societies, particularly those derived from or modelled on the engineering associations (ACM, IEEE, BCS), do have codes of ethics. Other smaller groups and individuals try to propose particular ethical codes, but there’s a network effect in play here. A code of ethics needs to be popular enough that clients of computerists and the public know about it and know to look out for it, with the far extreme being 100% coverage: either you have committed to the Hippocratic Oath, or you are not a practitioner of medicine.

Private incentives

Access to career information and employment opportunities

If you’re early-career, you want a job board to find someone who’s hiring early career roles. If you’re mid or senior career, you want a network where you can find out about opportunities and whether they’re worth pursuing. I don’t know if you’ve read the news lately, but staying employed in computering isn’t going great at the moment.

Opportunities to gain leadership experiences

How do you get that mid-career role? By showing that you can lead a project, team, or have some other influence. What early-career role gives you those opportunities? crickets Ad hoc networking based on open source seems to fill in for professional association here: rather than doing voluntary work contributing to Communications of the ACM, people are depositing npm modules onto the web.

Access to current information in the field

Rather than reading Communications of the ACM, we’re all looking for task-oriented information at the time we have a task to complete: the Q&A websites, technology-specific podcasts and video channels are filling in for any clearing house of professional advancement (to the point where even for-profit examples like publishing companies aren’t filling the gaps: what was the last attempt at an equivalent to Code Complete, 2nd Edition you can name?). This leads to a sort of balkanisation where anyone can quickly get up to speed on the technology they’re using, and generalising from that or building a holistic view is incredibly difficult. Certain blogs try to fill that gap, but again are individually published and not typically associated with any professional body.

Professional development or education programs

We have degree programs, and indeed those usually have accredited curricula (the ACM has traditionally been very active in that field, and the BCS in the UK). But many of the degrees are Computer Science rather than Software Engineering, and do they teach QA, or systems administration, or project management, or human-computer interaction? Are there vocational courses in those topics? Are they well-regarded: by potential students, by potential employers, by the public?

And then there are vendor certifications.

February 03, 2023

Reading List 300 by Bruce Lawson (@brucel)

Wow, 300 Reading Lists!

- Link o’ The Month: The Web Platform Is Back – Adobe writes “many of us now think that requiring Web frameworks on top of the standards was a transition phase, which is coming to its end as the Web Platform reaches a new level of maturity … Staying close to the Web Platform, without adding more than strictly needed on top of it, will help us create efficient and durable Web sites and applications. That’s the approach we are taking for upcoming tools”

- Related: Speed for who? – Andy Bell, a Northerner, gets righteously grumpy

- Three attributes for better web forms by Jeremy Keith. Try it out with this mobile form simulator

- (Almost) everything about storing data on the web (actually, storing data in the browser)

- The truth about CSS selector performance

- Blind news audiences are being left behind in the data visualisation revolution: here’s how we fix that

- There’s an AI for that – “AI use cases. Updated daily”

- Vivaldi Social on Mastodon: A Step-by-Step Tutorial – I’m using Viviadi’s mastodon instance, as I trust my old Opera chums to keep it stable and keep my data safe. Ruari’s tutorial is largely applicable to all instances, however.

- App stores, antitrust and their links to net neutrality: A review of the European policy and academic debate leading to the EU Digital Markets Act

- The IAB loves tracking users. But it hates users tracking them. – shady shenanigans from the Interactive Advertising Bureau. By Tezza.

February 02, 2023

The NTIA report on mobile app ecosystems by Bruce Lawson (@brucel)

The National Telecommunications and Information Administration (part of US Dept of Commerce) Mobile App Competition Report came out yesterday (1 Feb). Along with fellow Open Web Advocacy chums, I briefed them and also personally commented.

Key Policy Issue #1

Consumers largely can’t get apps outside of the app store model, controlled by Apple and Google. This means innovators have very limited avenues for reaching consumers.

Key Policy Issue #2

Apple and Google create hurdles for developers to compete for consumers by imposing technical limits, such as restricting how apps can function or requiring developers to go through slow and opaque review processes.

The report

I’m very glad that, like other regulators, we’ve helped them understand that there’s not a binary choice between single-platform iOS and Android “native” apps, but Web Apps (AKA “PWA”, Progressive Web Apps) offer a cheaper to produce alternative, as they use universal, mature web technologies:

Web apps can be optimized for design, with artfully crafted animations and widgets; they can also be optimized for unique connectivity constraints, offering users either a download-as-you go experience for low-bandwidth environments, or an offline mode if needed.

However, the mobile duopoly restrict the installation of web apps, favouring their own default browsers:

commenters contend that the major mobile operating system platforms—both of which derive revenue from native app downloads through their mobile app stores & whose own browsers derive significant advertising revenue—have acted to stifle implementation & distribution of web apps

NTIA recognises that this is a deliberate choice by Apple and (to a lesser extent) by Google:

developers face significant hurdles to get a chance to compete for users in the ecosystem, and these hurdles are due to corporate choices rather than technical necessities

NTIA explicitly calls out the Apple Browser Ban:

any web browser downloaded from Apple’s mobile app store runs on WebKit. This means that the browsers that users recognize elsewhere—on Android and on desktop computers—do not have the same functionality that they do on those other platforms.

It notes that WebKit has implemented fewer features that allow Web Apps to have similar capabilities as its iOS single-platform Apps, and lists some of the most important with the dates they were available in Gecko and Blink, continuing

According to commenters, lack of support for these features would be a more acceptable condition if Apple allowed other, more robust, and full-featured browser engines on its operating system. Then, iOS users would be free to choose between Safari’s less feature-rich experience (which might have other benefits, such as privacy and security features), and the broader capabilities of competing browsers (which might have other borrowers costs, such as greater drain on system resources and need to adjust more settings). Instead, iOS users are never given the opportunity to choose meaningfully differentiated browsers and experience features that are common for Android users—some of which have been available for over a decade.

Regardless of Apple’s claims that the Apple Browser Ban is to protect their users,

Multiple commenters note that the only obvious beneficiary of Apple’s WebKit restrictions is Apple itself, which derives significant revenue from its mobile app store commissions

The report concludes that

Congress should enact laws and relevant agencies should consider measures [aimed at] Getting platforms to allow installation and full functionality of third-party web browsers. To allow web browsers to be competitive, as discussed above, the platforms would need to allow installation and full functionality of the third-party web browsers. This would require platforms to permit third-party browsers a comparable level of integration with device and operating system functionality. As with other measures, it would be important to construct this to allow platform providers to implement reasonable restrictions in order to protect user privacy, security, and safety.

The NTIA joins the Australian, EU, and UK regulators in suggesting that the Apple Browser Ban stifles competition and must be curtailed.

The question now is whether Apple will do the right thing, or seek to hurl lawyers with procedural arguments at it instead, as they’re doing in the UK now. It’s rumoured that Apple might be contemplating about thinking about speculating about considering opening up iOS to alternate browsers for when the EU Digital Markets Act comes into force in 2024. But for every month they delay, they earn a fortune; it’s estimated that Google pays Apple $20 Billion to be the default search engine in Safari, and the App Store earned Apple $72.3 Billion in 2020 – sums which easily pay for snazzy lawyers, iPads for influencers, salaries for Safari shills, and Kool Aid for WebKit wafflers.

Place your bets!

January 30, 2023

In 1701, Asano Naganori, a feudal lord in Japan, was summoned to the shogun’s court in Edo, the town now called Tokyo. He was a provincial chieftain, and knew little about court etiquette, and the etiquette master of the court, Kira Kozuke-no-Suke, took offence. It’s not exactly clear why; it’s suggested that Asano didn’t bribe Kira sufficiently or at all, or that Kira felt that Asano should have shown more deference. Whatever the reasoning, Kira ridiculed Asano in the shogun’s presence, and Asano defended his honour by attacking Kira with a dagger.

Baring steel in the shogun’s castle was a grievous offence, and the shogun commanded Asano to atone through suicide. Asano obeyed, faithful to his overlord. The shogun further commanded that Asano’s retainers, over 300 samurai, were to be dispossessed and made leaderless, and forbade those retainers from taking revenge on Kira so as to prevent an escalating cycle of bloodshed. The leader of those samurai offered to divide Asano’s wealth between all of them, but this was a test. Those who took him up on the offer were paid and told to leave. Forty-seven refused this offer, knowing it to be honourless, and those remaining 47 reported to the shogun that they disavowed any loyalty to their dead lord. The shogun made them rōnin, masterless samurai, and required that they disperse. Before they did, they swore a secret oath among themselves that one day they would return and avenge their master. Then each went their separate ways. These 47 rōnin immersed themselves into the population, seemingly forgoing any desire for revenge, and acting without honour to indicate that they no longer followed their code. The shogun sent spies to monitor the actions of the rōnin, to ensure that their unworthy behaviour was not a trick, but their dishonour continued for a month, two, three. For a year and a half each acted dissolutely, appallingly; drunkards and criminals all, as their swords went to rust and their reputations the same.

A year and a half later, the forty-seven rōnin gathered together again. They subdued or killed and wounded Kira’s guards, they found a secret passage hidden behind a scroll, and in the hidden courtyard they found Kira and demanded that he die by suicide to satisfy their lord’s honour. When the etiquette master refused, the rōnin cut off Kira’s head and laid it on Asano’s grave. Then they came to the shogun, surrounded by a public in awe of their actions, and confessed. The shogun considered having them executed as criminals but instead required that they too die by suicide, and the rōnin obeyed. They were buried, all except one who was not present and who lived on, in front of the tomb of their master. The tombs are a place to be visited even today, and the story of the 47 rōnin is a famous one both inside and outside Japan.

You might think: why have I been told this story? Well, there were 47 of them. 47 is a good number. It’s the atomic number of silver, which is interesting stuff; the most electrically conductive metal. (During World War II, the Manhattan Project couldn’t get enough copper for the miles of wiring they needed because it was going elsewhere for the war effort, so they took all the silver out of Fort Knox and melted it down to make wire instead.) It’s strictly non-palindromic, which means that it’s not only not a palindrome, it remains not a palindrome in any base smaller than itself. And it’s how old I am today.

Yes! It’s my birthday! Hooray!

I have had a good birthday this year. The family and I had delightful Greek dinner at Mythos in the Arcadian, and then yesterday a bunch of us went to the pub and had an absolute whale of an afternoon and evening, during which I became heartily intoxicated and wore a bag on my head like Lord Farrow, among other things. And I got a picture of the Solvay Conference from Bruce.

This year is shaping up well; I have some interesting projects coming up, including one will-be-public thing that I’ve been working on and which I’ll be revealing more about in due course, a much-delayed family thing is very near its end (finally!), and in general it’s just gotta be better than the ongoing car crash that the last few years have been. Fingers crossed; ask me again in twelve months, anyway. I’ve been writing these little posts for 21 years now (last year has more links) and there have been ups and downs, but this year I feel quite hopeful about the future for the first time in a while. This is good news. Happy birthday, me.

January 27, 2023

Trying the PICO-8 by James Nutt (@zerosumjames)

I got myself a little present for Christmas. The PICO-8. The PICO-8 is a fantasy console, which is an emulator for a console that doesn’t exist. The PICO-8 comes with its own development and runtime environment, packaged into a single slick application with a beautiful aesthetic.

![]()

It also comes with a pretty strict set of constraints in which to work your magic.

Display: 128x128 16 colours

Cartridge size: 32k

Sound: 4 channel chip blerps (I assume this is an industry term)

Code: P8 Lua

CPU: 4M vm insts/sec

Sprites: 256 8x8 sprites

Map: 128x32 tiles

The constraints are appealing. Modern development at big companies sometimes seems like being at an all-you-can-eat buffet with the company credit card. Run out of CPU? Your boss can fix that with whatever the best new MacBook is. Webserver process eating RAM like candy? Doesn’t matter, that’s what automatic load balancers and infinite horizontal scaling is for.

With the PICO-8, there appears to be no such negotiation. There’s something liberating about this. By putting firm limits on the scope of what you can create, you know when to stop. If you hit the limit, you know you have to either admit that the project is as done as it’s going to get, or you need to refine or remove something that’s already there. Infinite potential is both a luxury and a curse.

What you get is what you get, and what you get is enough for a wide community of enthusiasts to create some beautiful and entertaining games that you can play directly in the browser, in your own copy of PICO-8, or on one of several fan-made hardware solutions.

My favourite feature is actually secondary to the main function of the console. Cartridges can be exported as PNG files, with game data steganographically hidden within. Each one of the below files is a playable cartridge that can be loaded into the PICO-8 console.

There’s something tactile that didn’t fully transfer from cartridge to CD and definitely didn’t transfer from CD to digital download. You can’t quite argue that a folder of PNGs isn’t a digital download, but somewhere in the dusty corners of my memory, I recall the sound of plastic rattling against plastic and a long day of zero responsibility ahead.

January 23, 2023

Apple: we’re so great for consumers we want to block investigation that could vindicate us by Bruce Lawson (@brucel)

Regular readers to this chucklefest will recall that I’ve been involved with briefing competition regulators in UK, US, Australia, Japan and EU about the Apple Browser Ban – Apple’s anti-competitive requirement that anything that can browse the web on iOS/iPad must use its WebKit engine. This allows Apple to stop web apps becoming as feature-rich as its iOS apps, for which it can charge a massive fee for listing in its monopoly App Store.

The UK’s Competition and Markets Authority recently announced a market investigation reference (MIR) into the markets for mobile browsers (particularly browser engines). The CMA may decide to make a MIR when it has reasonable grounds for suspecting that a feature or combination of features of a market or markets in the UK prevents, restricts, or distorts competition (PDF).

You would imagine that Apple would welcome this opportunity to be scrutinised, given that Apple told CMA (PDF) that

By integrating WebKit into iOS, Apple is able to guarantee robust user privacy protections for every browsing experience on iOS device… . WebKit has also been carefully designed and optimized for use on iOS devices. This allows iOS devices to outperform competitors on web-based browsing benchmarks… Mandating Apple to allow apps to use third-party rendering engines on iOS, as proposed by the IR, would break the integrated security model of iOS devices, reduce their privacy and performance, and ultimately harm competition between iOS and Android devices.

Yet despite Apple’s assertion that it is simply the best, better than all the rest, it is weirdly reluctant to see the CMA investigate it. You would assume that Apple are confident that it would be vindicated by CMA as better than anyone, anyone they’ve ever met. Yet Apple applied to the Competition Appeal Tribunal (PDF, of course), seeking

1. An Order that the MIR Decision is quashed.

2. A declaration that the MIR Decision and market investigation purportedly launched by

reference to it are invalid and of no legal effect.

In its Notice of Application, Apple also seeks interim relief in the form of a stay of the market investigation pending determination of the application.

Why would this be? I don’t know (I seem no longer to be on not-Steve’s Xmas card list). But it’s interesting to note that a CMA Market Investigation can have real teeth. It has previously forced forced the sale of airports and hospitals (gosh! A PDF) in other sectors.

A market investigation lowers the hurdle for the CMA: it doesn’t have to prove wrongdoing, just adverse effects on competition (abbreviated as AEC, which in other antitrust jurisdictions, however, stands for “as efficient competitor”) and has greater powers to impose remedies. Otherwise a conventional antitrust investigation of Apple’s conduct would be required, and Apple would have to be shown to have abused a dominant position in the relevant market. Apple would like to deprive the CMA of its more powerful tool, and essentially argues that the CMA has deprived itself of that tool by failing to abide by the applicable statute.

It’s rumoured that Apple might be contemplating about thinking about speculating about considering opening up iOS to alternate browsers for when the EU Digital Markets Act comes into force in 2024. But for every month they delay, they earn a fortune; it’s estimated that Google pays Apple $20 Billion to be the default search engine in Safari, and the App Store earned Apple $72.3 Billion in 2020 – sums which easily pay for snazzy lawyers, iPads for influencers, salaries for Safari shills, and Kool Aid for WebKit wafflers.

(Last Updated on )

January 16, 2023

Low-stakes conspiracy theory: they were invented by word processing marketers to justify spell-check features that weren’t necessary.

Evidence: the Oxford English Dictionary (Oxford being in Britain) entry for “-ise” suffix’s first sense is “A frequent spelling of -ize suffix, suffix forming verbs, which see.” So in a British dictionary, -ize is preferred. But in a computer, I have to change my whole hecking country to be able to write that!

January 13, 2023

Reading List 299 by Bruce Lawson (@brucel)

Due to annoyances in the economy (thanks, Putin, and Liz Truss) I find myself once again on the jobs market. Read my LinkTin C.V. thingie, then hire me to make your digital products more accessible, faster and full of standardsy goodness!

- A CSS challenge: skewed highlight by my old chum Vadim Makeev.

- What does it look like for the web to lose? by Chris Coyier

- Our top Core Web Vitals recommendations for 2023 “A collection of the best practices that the Chrome DevRel team believes are the most effective ways to improve Core Web Vitals performance in 2023.”

- Counting unique visitors without using cookies, UIDs or fingerprinting – “Building a web analytics service without cookies poses a tricky problem: How do you distinguish unique visitors?”

- Top 5 Accessibility Issues in 2022 – the old ones are the worst

- Component library accessibility audit – some interesting points about the difficulty of auditing individual components that are out of context

- A Future Where XR is Born Accessible – “This case study provides an in-depth look at the steps taken to launch and sustain the Initiative in its first year of operation and outlines how XR Access is evolving and laying the groundwork for its next stage of maturity.”

- How Indie Studios Are Pioneering Accessible Game Design – Smaller shops prove that you don’t need a AAA budget to create games for everyone.

- Setup iPhone or iPad for iOS mobile Accessibility testing – a 12 minute video

- Things CSS Could Still Use Heading Into 2023 by Chris Coyier

- Web apps could de-monopolize mobile devices writes Cory Doctorow

- Welcome to this course on Prompt Engineering! – “I like to think of Prompt Engineering (PE) as “How to talk to AI to get it to do what you want”.”

- Teachable Machine – “Train a computer to recognize your own images, sounds, & poses. A fast, easy way to create machine learning models for your sites, apps, and more – no expertise or coding required.”

- What Did We Get Stuck In Our Rectums Last Year? “All reports are taken from the U.S. Consumer Product Safety Commission’s database of emergency room visits” – also features all other orifices.

In March, I shall be keynoting at axe-con with a talk called Whose web is it, anyway?. It’s free.

January 02, 2023

What to do about hotlinking by Stuart Langridge (@sil)

Hotlinking, in the context I want to discuss here, is the act of using a resource on your website by linking to it on someone else’s website. This might be any resource: a script, an image, anything that is referenced by URL.

It’s a bit of an anti-social practice, to be honest. Essentially, you’re offloading the responsibility for the bandwidth of serving that resource to someone else, but it’s your site and your users who get the benefit of that. That’s not all that nice.

Now, if the “other person’s website” is a CDN — that is, a site deliberately set up in order to serve resources to someone else — then that’s different. There are many CDNs, and using resources served from them is not a bad thing. That’s not what I’m talking about. But if you’re including something direct from someone else’s not-a-CDN site, then… what, if anything, should the owner of that site do about it?

I’ve got a fairly popular, small, piece of JavaScript: sorttable.js, which makes an HTML table be sortable by clicking on the headers. It’s existed for a long time now (the very first version was written twenty years ago!) and I get an email about it once a week or so from people looking to customise how it works or ask questions about how to do a thing they want. It’s open source, and I encourage people to use it; it’s deliberately designed to be simple1, because the target audience is really people who aren’t hugely experienced with web development and who can add sortability to their HTML tables with a couple of lines of code.

The instructions for sorttable are pretty clear: download the library, then put it in your web space and include it. However, some sites skip that first step, and instead just link directly to the copy on my website with a <script> element. Having looked at my bandwidth usage recently, this happens quite a lot2, and on some quite high-profile sites. I’m not going to name and shame anyone3, but I’d quite like to encourage people to not do that, if there’s a way to do it. So I’ve been thinking about ways that I might discourage hotlinking the script directly, while doing so in a reasonable and humane fashion. I’m also interested in suggestions: hit me up on Mastodon at @sil@mastodon.social or Twitter4 as @sil.

Move the script to a different URL

This is the obvious thing to do: I move the script and update my page to link to the new location, so anyone coming to my page to get the script will be wholly unaffected and unaware I did it. I do not want to do this, for two big reasons: it’s kicking the can down the road, and it’s unfriendly.

It’s can-kicking because it doesn’t actually solve the problem: if I do nothing else to discourage the practice of hotlinking, then a few years from now I’ll have people hotlinking to the new location and I’ll have to do it again. OK, that’s not exactly a lot of work, but it’s still not a great answer.

But more importantly, it’s unfriendly. If I do that, I’ll be deliberately breaking everyone who’s hotlinking the script. You might think that they deserve it, but it’s not actually them who feel the effect; it’s their users. And their users didn’t do it. One of the big motives behind the web’s general underlying principle of “don’t break the web” is that it’s not reasonable to punish a site’s users for the bad actions of the site’s creators. This applies to browsers, to libraries, to websites, the whole lot. I would like to find a less harsh method than this.

Move the script to a different dynamic URL

That is: do the above, but link to a URL which changes automatically every month or every minute or something. The reason that I don’t want to do this (apart from the unfriendly one from above, which still applies even though this fixes the can-kicking) is that this requires server collusion; I’d need to make my main page be dynamic in some way, so that links to the script also update along with the script name change. This involves faffery with cron jobs, or turning the existing static HTML page into a server-generated page, both of which are annoying. I know how to do this, but it feels like an inelegant solution; this isn’t really a technical problem, it’s a social one, where developers are doing an anti-social thing. Attempting to solve social problems with technical measures is pretty much always a bad idea, and so it is in this case.

Contact the highest-profile site developers about it

I’m leaning in this direction. I’m OK with smaller sites hotlinking (well, I’m not really, but I’m prepared to handwave it; I made the script and made it easy to use exactly to help people, and if a small part of that general donation to the universe includes me providing bandwidth for it, then I can live with that). The issue here is that it’s not always easy to tell who those heavy-bandwidth-consuming sites are. It relies on the referrer being provided, which it isn’t always. It’s also a bit more work on my part, because I would want to send an email saying “hey, Site X developers, you’re hotlinking my script as you can see on page sitex.example.com/sometable.html and it would be nice if you didn’t do that”, but I have no good way of identifying those pages; the document referrer isn’t always that specific. If I send an email saying “you’re hotlinking my script somewhere, who knows where, please don’t do that” then the site developers are quite likely to put this request at the very bottom of their list, and I don’t blame them.

Move the script and maliciously break the old one

This is: I move the script somewhere else and update my links, and then I change the previous URL to be the same script but it does something like barf a complaint into the console log, or (in extreme cases based on suggestions I’ve had) pops up an alert box or does something equally obnoxious. Obviously, I don’t wanna do this.

Legal-ish things

That is: contact the highest profile users, but instead of being conciliatory, be threatening. “You’re hotlinking this, stop doing it, or pay the Hotlink Licence Fee which is one cent per user per day” or similar. I think the people who suggest this sort of thing (and the previous malicious approach) must have had another website do something terrible to them in a previous life or something and now are out for revenge. I liked John Wick as much as the next poorly-socialised revenge-fantasy tech nerd, but he’s not a good model for collaborative software development, y’know?

Put the page (or whole site) behind a CDN

I could put the site behind Cloudflare (or perhaps a better, less troubling CDN) and then not worry about it; it’s not my bandwidth then, it’s theirs, and they’re fine with it. This used to be the case, but recently I moved web hosts5 and stepped away from Cloudflare in so doing. While this would work… it feels like giving up, a bit. I’m not actually solving the problem, I’m just giving it to someone else who is OK with it.

Live with it

This isn’t overrunning my bandwidth allocation or anything. I’m not actually affected by this. My complaint isn’t important; it’s more a sort of distaste for the process. I’d like to make this better, rather than ignoring it, even if ignoring it doesn’t mean much, as long as I’m not put to more inconvenience by fixing it. We want things to be better, after all, not simply tolerable.

So… what do you think, gentle reader? What would you do about it? Answers on a postcard.

- and will stay simple; I’d rather sorttable were simple and relatively bulletproof than comprehensive and complicated. This also explains why it’s not written in very “modern” JS style; the best assurance I have that it works in old browsers that are hard to test in now is that it DID work in them and I haven’t changed it much ↩

- in the last two weeks I’ve had about 200,000 hits on sorttable.js from sites that hotlink it, which ain’t nothin’ ↩

- yet, at least, so don’t ask ↩

- if you must ↩

- to the excellent Mythic Beasts, who are way better than the previous hosts ↩

December 20, 2022

Bad performance is bad accessibility by Bruce Lawson (@brucel)

What is “accessibility”? For some, it’s about ensuring that your sites and apps don’t block people with disabilities from completing tasks. That’s the main part of it, but in my opinion it’s not all of the story. Accessibility, to me, means taking care to develop digital services that are inclusive as possible. That means inclusive of people with disabilities, of people outside Euro-centric cultures, and people who don’t have expensive, top-the-range hardware and always-on cheap fast networks.

In his closely argued post The Performance Inequality Gap, 2023, Alex Russell notes that “When digital is society’s default, slow is exclusionary”, and continues

sites continue to send more script than is reasonable for 80+% of the world’s users, widening the gap between the haves and the have-nots. This is an ethical crisis for the frontend.

Big Al goes on to suggest that in order to reach interactivity in less than 5 seconds on first load, we should send no more that ~150KiB of HTML, CSS, images, and render-blocking font resources, and no more than ~300-350KiB of JavaScript. (If you want to know the reasoning behind this, Alex meticulously cites his sources in the article; read it!)

Now, I’m not saying this is impossible using modern frameworks and tooling (React, Next.js etc) that optimise for good “developer experience”. But it is a damned sight harder, because such tooling prioritises developer experience over user experience.

In January, I’ll be back on the jobs market (here’s my LinkTin resumé!) so I’ve been looking at what’s available. Today I saw a job for a Front End lead who will “write the first lines of front end code and set the tone for how the team approaches user-facing software development”. The job spec requires a “bias towards solving problems in simple, elegant ways”, and the candidate should be “confident building with…reliability and accessibility in mind”. Yet, weirdly, even though the first lines of code are yet to be written, it seems the tech stack is already decided upon: React and Next.js.

As Alex’s post shows, such tooling conspires against simplicity and elegance, and certainly against reliability and accessibility. To repeat his message:

When digital is society’s default, slow is exclusionary

Bad performance is bad accessibility.

December 19, 2022

Reading List 298 by Bruce Lawson (@brucel)

- The UK competition regulator just published its statement of issues (PDF, 17 pp) for its new Mobile browsers and cloud gaming market investigation.

- Apple is reportedly preparing to allow third-party app stores on the iPhone – let’s see; this is “reportedly”, and nothing about PWA-capable 3rd party browser engines on iThings. But it’s the sweet smell of #appleBrowserBan change blowing in the wind.

- The Web’s Next Transition – Kent C. Dodds thinks it is “Progressively Enhanced Single Page Apps”. Progressive Enhancement? Yay. Single Page Apps? Meh. Talking of which…

- Shared Element Transitions is now View Transitions and when smooth and simple transitions with the View Transitions API are shipping for multi-page apps, we’ll be able to get the snappy feel of single page apps, without all the crap and accessibility problems. Yay!

- Access & Use shows what it needs to make dynamic elements in websites accessible and usable for all.

- Introducing Codux – a new visual IDE for easing and accelerating the development of React projects from Wix. (I worked on this team).

- Shadow DOM and accessibility: the trouble with ARIA and how the Accessibility Object Model solves the problem

- You Don’t Need ARIA For That – “ARIA usage certainly has its place. But overall, reduced usage of ARIA will, ironically, greatly increase accessibility.”

- Making microservices accessible

- Tab order in CSS Masonry – Chromium discussion on what the order should be

- Meta is being sued for $2 billion for exacerbating Ethiopia’s civil war -Facebook’s algorithm helped fuel the viral spread of hate and violence during Ethiopia’s civil war, a legal case alleges.”

- How I set up a Twitter archive with Tweetback – flame-haired FOSS adonis Stuart Langridge has step-by-step instructions. Needs you to be able to do stuff in Terminal.

- Make your own simple, public, searchable Twitter archive – download your archive from twitter, this tool processes the zip file (on your local machine), upload the resulting html pages to your archive URL. Simpler than using Tweetback, but less customisable.

- And finally: Musk-era Twitter emulator

(Last Updated on )

How I set up a Twitter archive with Tweetback by Stuart Langridge (@sil)

Twitter currently has problems. Well, one specific problem, which is the bloke who bought it. My solution to this problem has been to move to Mastodon (@sil@mastodon.social if you want to do the same), but I’ve invested fifteen years of my life providing twitter.com with free content so I don’t really want it to go away. Since there’s a chance that the whole site might vanish, or that it continues on its current journey until I don’t even want my name associated with it any more, it makes sense to have a backup. And obviously, I don’t want all that lovely writing to disappear from the web (how would you all cope without me complaining about some random pub’s music in 2011?!), so I wanted to have that backup published somewhere I control… by which I mean my own website.

So, it would be nice to be able to download a list of all my tweets, and then turn that into some sort of website so it’s all still available and published by me.

Fortunately, Zach Leatherman came to save us by building a tool, Tweetback, which does a lot of the heavy lifting on this. Nice one, that man. Here I’ll describe how I used Tweetback to set up my own personal Twitter archive. This is unavoidably a bit of a developer-ish process, involving the Terminal and so on; if you’re not at least a little comfortable with doing that, this might not be for you.

Step 1: get a backup from Twitter

This part is mandatory. Twitter graciously permit you to download a big list of all the tweets you’ve given them over the years, and you’ll need it for this. As they describe in their help page, go to your Twitter account settings and choose Your account > Download an archive of your data. You’ll have to confirm your identity and then say Request data. They then go away and start constructing an archive of all your Twitter stuff. This can take a couple of days; they send you an email when it’s done, and you can follow the link in that email to download a zip file. This is your Twitter backup; it contains all your tweets (and some other stuff). Stash it somewhere; you’ll need a file from it shortly.

Step 2: get the Tweetback code

You’ll need both node.js and git installed to do this. If you don’t have node.js, go to nodejs.org and follow their instructions for how to download and install it for your computer. (This process can be fiddly; sorry about that. I suspect that most people reading this will already have node installed, but if you don’t, hopefully you can manage it.) You’ll also need git installed: Github have some instructions on how to install git or Github Desktop, which should explain how to do this stuff if you don’t already have it set up.

Now, you need to clone the Tweetback repository from Github. On the command line, this looks like git clone https://github.com/tweetback/tweetback.git; if you’re using Github Desktop, follow their instructions to clone a repository. This should give you the Tweetback code, in a folder on your computer. Make a note of where that folder is.

Step 3: install the Tweetback code

Open a Terminal on your machine and cd into the Tweetback folder, wherever you put it. Now, run npm install to install all of Tweetback’s dependencies. Since you have node.js installed from above, this ought to just work. If it doesn’t… you get to debug a bit. Sorry about that. This should end up looking something like this:

$ npm install

npm WARN deprecated @npmcli/move-file@1.1.2: This functionality has been moved to @npmcli/fs

added 347 packages, and audited 348 packages in 30s

52 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

Step 4: configure Tweetback with your tweet archive

From here, you’re following Tweetback’s own README instructions: they’re online at https://github.com/tweetback/tweetback#usage and also are in the README file in your current directory.

Open up the zip file you downloaded from Twitter, and get the data/tweets.js file from it. Put that in the database folder in your Tweetback folder, then edit that file to change window.YTD.tweet.part0 on the first line to module.exports, as the README says. This means that your database/tweets.js file will now have the first couple of lines look like this:

module.exports = [

{

"tweet" : {